What is Dolby Atmos, DTS:X, and Immersive Audio?

Immersive Audio has made a huge splash in the industry since its introduction, and its popularity has only grown since. Initially reserved for large cinemas, Immersive Audio can now be found in common consumer and mobile devices for less than $200. What’s more, Immersive Audio has reshaped how we experience music in both live and home settings, with a steady stream of classic albums being remixed in Immersive formats for streaming services like Apple Music. Furthermore, popular video streaming services like Netflix and HBO now require Immersive Audio mixes as a deliverable, making it essential for studios all over the world to update their mix rooms for Immersive Audio to stay on the cutting edge.

Westlake Pro has been at the forefront of this development, and we’ve helped studios large and small perfect their Immersive Audio setups for both Music and Post-Production.

If you’re still unfamiliar with the basics of Immersive Audio, this series will be the perfect place for you to get started. You can even schedule a private consultation with Westlake Pro to help you through the process of upgrading your studio today.

PART TWO:

Object vs. Channel-Based Audio

Immersive audio, which is sometimes called 3D audio, gives sound engineers the power to precisely place sound in 3D space. In stereo mixes, engineers simply pan the sound between the left and right channels with the twist of a knob. The final mix stores the resulting stereo field in those two channels. If a hi-hat is panned to the left in the final mix, then the left channel will have the same relative volume of hi-hat regardless of whether it’s played back on studio monitors, home speakers, or inexpensive earbuds.

What Is Channel-Based Audio?

Stereo is referred to as a “channel-based” mix because all the sound data is mixed and permanently etched into its two channels. There’s no clean way, for instance, to go back after the mix is done and move that hi-hat to the right side. The only way to “move” something in the final mix is to go back to the multi-track files and create a new mix, a time-consuming and sometimes expensive process. Channel-based mixes are not limited to stereo, either. Formats like Dolby 7.1 are still channel-based mixes, only with more channels. Whatever sound is mixed into the right rear surround speaker of a 7.1 mix is permanently burned into that channel.
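To make the “burned in” idea concrete, here is a minimal Python sketch of a channel-based stereo mix. It assumes a constant-power pan law and made-up sample values; the function names `pan_mono_to_stereo` and `mix_down` are hypothetical and do not come from any particular DAW.

```python
import math


def pan_mono_to_stereo(samples, pan):
    """Constant-power pan. pan runs from -1.0 (hard left) to +1.0 (hard right)."""
    angle = (pan + 1.0) * math.pi / 4.0  # map [-1, 1] onto [0, pi/2]
    left_gain = math.cos(angle)
    right_gain = math.sin(angle)
    left = [s * left_gain for s in samples]
    right = [s * right_gain for s in samples]
    return left, right


def mix_down(panned_tracks):
    """Sum already-panned (left, right) pairs into a single stereo pair."""
    length = max(len(left) for left, _ in panned_tracks)
    mix_left = [0.0] * length
    mix_right = [0.0] * length
    for left, right in panned_tracks:
        for i in range(len(left)):
            mix_left[i] += left[i]
            mix_right[i] += right[i]
    return mix_left, mix_right


hi_hat = [0.5, -0.5, 0.25]  # made-up sample values for illustration
kick = [1.0, 0.0, -1.0]

stereo_mix = mix_down([
    pan_mono_to_stereo(hi_hat, -0.8),  # hi-hat mostly left
    pan_mono_to_stereo(kick, 0.0),     # kick centered
])
```

Once `mix_down` has summed the tracks, `stereo_mix` holds only two lists of numbers. The hi-hat’s pan position is no longer stored anywhere, which is why moving it means returning to the multi-track session.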

But what if there was a way to store audio elements so mixers can define the position/panning at a later date without permanently burning it into channels? This is exactly what makes immersive audio formats like Dolby Atmos and DTS:X so revolutionary.

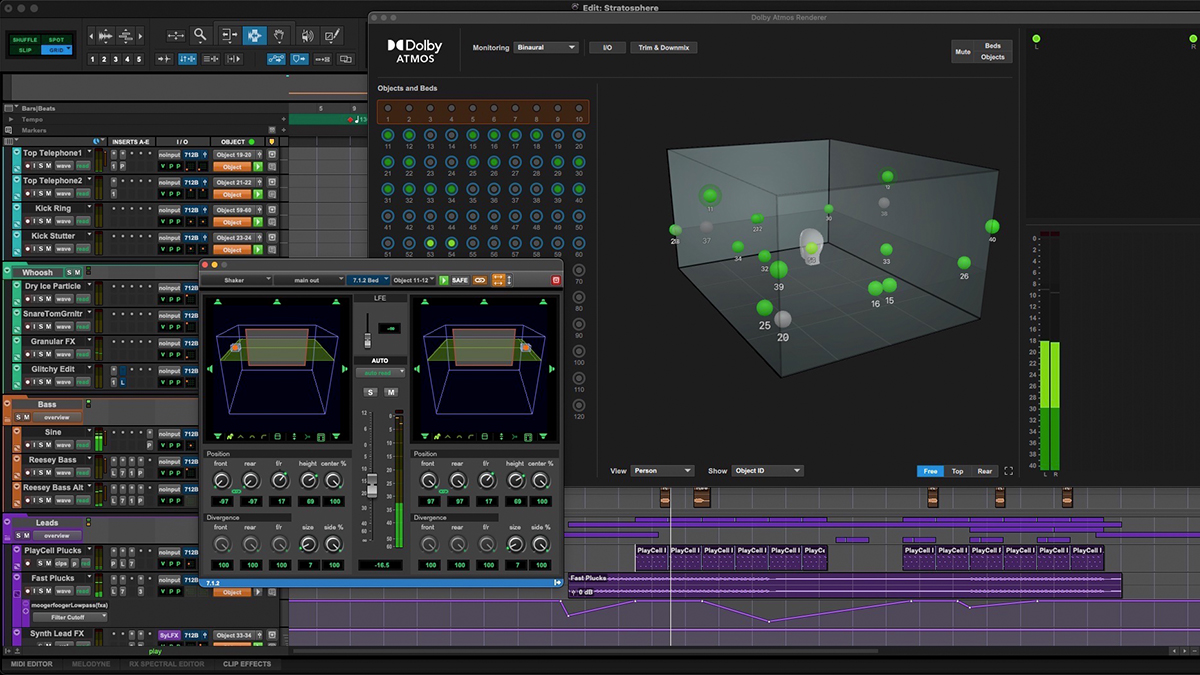

Above: A screenshot of Dolby Atmos Renderer with defined placement and panning of audio elements around the listener in a Home Theater.

What Is Object-Based Audio?

Immersive audio takes a huge leap ahead of channel-based mixing by introducing the concept of “object-based” mixing. Object-based mixing treats each sound independently of a channel, and uses metadata to indicate “where” the sound should play. This means sound data is not burned into a channel. Instead, the sound element and its associated metadata are stored together in their own sound object. When it comes time for playback, an immersive audio decoder reads the position metadata stored in the object and plays its sound element accordingly. The sound decoder can make individual adjustments to each discrete sound object to account for whatever speaker setup is being used, such as for 7.1.4 Dolby Atmos theaters, sound bars or headphones. Let’s use an example.
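As a rough illustration of what a sound object might look like, the Python sketch below pairs audio samples with time-stamped position metadata. The class names, field names, and coordinate convention (x left to right, y back to front, z floor to ceiling) are assumptions made for this example; they are not the actual Dolby Atmos or DTS:X data formats.

```python
from dataclasses import dataclass, field


@dataclass
class PositionKeyframe:
    time_s: float  # when this position applies, in seconds
    x: float       # assumed: -1.0 (left) to +1.0 (right)
    y: float       # assumed: -1.0 (back) to +1.0 (front)
    z: float       # assumed: 0.0 (ear level) to 1.0 (ceiling)


@dataclass
class AudioObject:
    name: str
    samples: list       # the sound element itself, not tied to any channel
    sample_rate: int
    keyframes: list = field(default_factory=list)  # must be sorted by time_s

    def position_at(self, time_s):
        """Return (x, y, z) at time_s, interpolating linearly between keyframes."""
        frames = self.keyframes
        if not frames:
            raise ValueError("object has no position metadata")
        if time_s <= frames[0].time_s:
            return (frames[0].x, frames[0].y, frames[0].z)
        for a, b in zip(frames, frames[1:]):
            if a.time_s <= time_s <= b.time_s:
                t = (time_s - a.time_s) / (b.time_s - a.time_s)
                return (
                    a.x + t * (b.x - a.x),
                    a.y + t * (b.y - a.y),
                    a.z + t * (b.z - a.z),
                )
        last = frames[-1]
        return (last.x, last.y, last.z)


# A helicopter that flies from the back of the room to the front, overhead.
helicopter = AudioObject(
    name="helicopter_rotor",
    samples=[0.0, 0.3, -0.3, 0.1],  # placeholder audio
    sample_rate=48000,
    keyframes=[
        PositionKeyframe(time_s=0.0, x=0.0, y=-1.0, z=1.0),
        PositionKeyframe(time_s=4.0, x=0.0, y=1.0, z=1.0),
    ],
)

midpoint = helicopter.position_at(2.0)
```

The key design point is that `samples` and `keyframes` travel together but stay separate: changing where the sound plays only means editing the metadata, not re-recording or re-mixing the audio.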

In the Wild

Imagine a movie with a helicopter hovering overhead. It makes sense that much of the helicopter sound would come from the 4 overhead speakers in a 7.1.4 Dolby Atmos theater. In a 7.1.4 channel-based mix, most of the helicopter sound would be burned into the 4 top speaker channels. If the helicopter then flies forward, its sound would pan toward the front top speakers, and in a channel-based mix that pan would be permanent. As a result, the same 7.1.4 channel-based mix will not sound as intended when played on headphones.

Truly a Flexible Workflow

With the object-based model of immersive sound, the sound of the helicopter rotor would be stored digitally, but not burned into any specific channel. Instead, the helicopter is stored as a discrete sound element, and its metadata will indicate its position in the virtual 3D space of the theater. When the helicopter scene plays, the Atmos or DTS:X decoder reads the metadata to determine the X, Y, and Z coordinates for the rotor sound. It then plays the sound based on where the helicopter should be, not just how loud it should be.
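The idea of turning X, Y, and Z coordinates into speaker output can be sketched as follows. This is a simplified inverse-distance panner written for illustration only; it is not how the Dolby Atmos or DTS:X renderers actually compute gains, and the speaker names and coordinates are hypothetical.

```python
import math


def render_gains(position, speakers, rolloff=2.0):
    """Give each speaker a gain that falls off with distance from the object.

    position: (x, y, z) of the sound object
    speakers: dict mapping speaker name -> (x, y, z)
    Gains are normalized so their squares sum to 1 (constant total power).
    """
    weights = {}
    for name, speaker_pos in speakers.items():
        distance = math.dist(position, speaker_pos)
        weights[name] = 1.0 / (distance ** rolloff + 1e-6)  # avoid divide-by-zero
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {name: w / norm for name, w in weights.items()}


# Hypothetical room: four overhead speakers plus four ear-level corners.
speakers = {
    "top_front_left": (-0.5, 0.5, 1.0),
    "top_front_right": (0.5, 0.5, 1.0),
    "top_rear_left": (-0.5, -0.5, 1.0),
    "top_rear_right": (0.5, -0.5, 1.0),
    "front_left": (-1.0, 1.0, 0.0),
    "front_right": (1.0, 1.0, 0.0),
    "rear_left": (-1.0, -1.0, 0.0),
    "rear_right": (1.0, -1.0, 0.0),
}

# Helicopter overhead, slightly toward the front of the room.
helicopter_position = (0.0, 0.5, 1.0)
gains = render_gains(helicopter_position, speakers)
```

Passing a different `speakers` dictionary with the same `helicopter_position` yields a different set of gains, which is the sense in which the decoder places the sound based on where it should be rather than on a fixed channel assignment.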

And since the sound is not burned into a particular channel, it opens up many possibilities for how it can be played back while also making life easier for sound engineers.

Above: An Immersive Audio setup at Inventure Studios, designed and installed by Westlake’s Pros.

In the Way of Creativity

With channel-based formats, mixers need to create and optimize separate mixes for different speaker configurations. They typically start by completing a full 7.1 mix, then go back and do another mix stepped down to 5.1, and repeat the process for whatever other mixes they need: 4.0, 2.0, etc. Not only is this time-consuming for the mixer, but it also removes much of their creative control over the playback. Of course it will sound perfect in a 7.1 surround sound theater, but how will it sound in your home, or on your headphones? Immersive, object-based mixing with platforms like Dolby Atmos and DTS:X solves both of these challenges.

Streamlining the Process

First, immersive audio only requires one master mix, so there’s no need for separate mixes for 7.1, 5.1, etc. Needless to say, this will save a huge amount of time and effort for engineers.

Metadata Doing the Heavy Lifting

Second, software like Dolby Atmos and DTS:X handles all the positioning using object-based metadata. The immersive audio decoder automatically places the discrete sound elements based on that metadata, so the mix will sound as intended regardless of whether it’s played in a 7.1.4 stadium theater, on a 5.1.2 home theater system, through a consumer sound bar, or on headphones. Stuart Bowling, Director, Content and Creative Relations at Dolby Labs, describes immersive audio as, “Author once, optimize anywhere.”
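Layout names such as 7.1.4 and 5.1.2 follow a three-number convention: ear-level speakers, then subwoofer (LFE) channels, then overhead speakers. A label with only two numbers, such as 7.1, has no overhead speakers. The small Python helper below is a sketch for decoding these labels; the names `SpeakerLayout` and `parse_layout` are hypothetical and exist only for this example.

```python
from typing import NamedTuple


class SpeakerLayout(NamedTuple):
    ear_level: int   # speakers around the listener at ear height
    subwoofers: int  # low-frequency effects (LFE) channels
    overhead: int    # ceiling or height speakers

    @property
    def total(self):
        return self.ear_level + self.subwoofers + self.overhead


def parse_layout(label):
    """Parse a label such as '7.1.4' or '5.1' into a SpeakerLayout."""
    parts = [int(p) for p in label.split(".")]
    if len(parts) not in (2, 3):
        raise ValueError(f"unexpected layout label: {label!r}")
    if len(parts) == 2:
        parts.append(0)  # two-number labels have no overhead speakers
    return SpeakerLayout(*parts)


theater = parse_layout("7.1.4")
home = parse_layout("5.1.2")
surround = parse_layout("5.1")
```

Here `theater.overhead` is 4 and `home.overhead` is 2, which matches the overhead speaker counts described for those two setups.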

Take a look at the following Atmos configurations using a 5.1.2 speaker setup and a soundbar. Both come from the same master mix, with Atmos adjusting the playback based on each speaker configuration. Also notice the use of the ceiling (z-space) to create the immersive audio effect.

A Dolby Atmos 5.1.2 home theater with 2 ceiling-mounted speakers and rear speakers bouncing sound off the ceiling.

A Dolby Atmos setup using a sound bar to reflect z-space audio elements off the ceiling.

In the final installment of our three-part blog series on immersive audio, we’ll walk you through the different platforms for object-based audio, Dolby Atmos and DTS:X, and show you how you can use the Dolby Atmos Mastering Suite and DTS:X Creator Suite to create truly immersive mixes. Stay tuned!



Essential Tools for Immersive Audio

- Avid MTRX Studio 64-channel Pro Tools Interface: $4,999.00
- JBL 705P 5-in 2-way Master Reference Monitor: $1,199.00
- Avid S6 Modular Control Surface: Call for Price

Have Questions?

Our Pros are glad to answer any question you have about Dolby Atmos or DTS:X.

You can visit our Contact Page to give them a call or send an email to our team of professionals.